Photo by Ricardo Gomez Angel on Unsplash

Overview

A surprising amount of valuable data is locked away in PDF files. There are a variety of methods for extracting data from them, but the job is made more difficult when they contain embedded images that hold the text. We recently experimented with Google Cloud Vision API to handle the task, and have had great success.

Why PDF files?

Why, indeed. PDF files are great for printing, but in this day and age where most of the content we consume is in a browser or an app they seem to be overused. In many cases content authors may just find it simpler to publish from a program like Word directly to PDF in order to preserve formatting and such. This is fine, but it also often makes it less convenient to read the documents, and even more difficult to extract the content they contain.

For example, we’ve done quite a bit with documents published by governments, and often the data is provided in PDF files. This would include official records like deeds and mortgages, but could also be things like lists of criminal warrants.

How to get text from PDF files

Fortunately the majority of PDF files contain text that can be extracted relatively easily. That is, even though the PDF file itself is a binary document, the text within it can be selected and extracted. A quick way of determining how easily you can extract text from a PDF file is to simply try selecting it with your mouse. If you can highlight the text it’s likely you can extract it.

We’ve experimented with a variety of tools for extracting text from PDF files, and have found good old pdftohtml to be one of the most robust and reliable. It’s free and open source, and is pretty much as capable as many of its commercial cousins (e.g., ABBY). You can give pdftohtml a PDF file and it will spit back a nicely-formatted block of XML. The XML contains text as well as character positions, among other useful data.

In our case we created a web-based API that will take either a URL or a PDF file upload, and return the resulting XML from pdftohtml. This makes it simple to integrate with our screen-scraper software, as well as just about anything else you might want to use it with.

So long as the PDF file contains selectable text life is rosy. The pdftohtml binary handles it, and parsing the resulting XML is relatively simple. We’ve created our own Java-based parser for handling the XML, and something similar could be created in pretty much any other language.

Note also that there are a number of libraries you might also use to extract data in the programming language of your choice. PDFBox is a good option for Java. PDFMiner and PyPDF2 do great if you’re into Python.

What happens, though, when the text in the PDF file isn’t actually text? Many PDF files are readable, but the text they contain is actually embedded inside of images within the PDF. That is, the text can’t be easily extracted. A utility like pdftohtml, or some other PDF parser, likely won’t be able to do anything at all with it.

OCR FTW

Over the 17 years we’ve been in business we’ve tinkered here and there with various OCR (optical character recognition) packages. At one point not long ago we did an evaluation of several of them for a project we were working on. We tried ABBY, tesseract, Aspire, and others. None of them were up to our standards, as we strive for 100% data accuracy. It’s not okay for a “5” to become a “6” and for a “cl” to become a “d”.

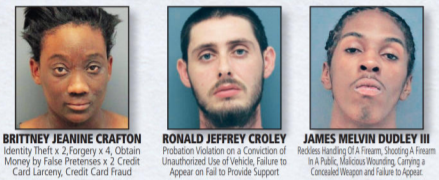

Recently we decided to give it another shot. Not long ago both Amazon and Google released cloud-based OCR services (Textract and Cloud Vision API, respectively), so we thought we’d give them a whirl with a few projects we currently have. Two of those projects deal with lists of individuals who have warrants for their arrests. Here are a couple of sample screenshots:

Obviously the text is legible, but it’s embedded inside of images, so it can’t be extracted without using OCR.

We gave tesseract another try with them, but got the same marginal results we’ve seen in the past. The commercial packages may yield the same, so we tried them with the new cloud-based offerings. We found Amazon’s Textract to give pretty good results, but, again, 100% accuracy is what we’re shooting for. Happily, Google’s Cloud Vision API gave us excellent results. The accuracy isn’t 100%, but it may be hovering around 99%. It will also likely get even more accurate over time, given the AI muscle Google likely has behind it.

Using Google Cloud Vision API

We’re primarily a Java shop, so we decided to go with the Java API that Google provides. The API returns objects that contain location data (top, bottom, left, and right coordinates) for each recognized word. With these coordinates, the task then becomes assembling them into blocks and sentences suitable for extraction.

Because we already have code to parse the XML format that pdftohtml outputs, we decided to write bridge code that would take the Java objects from Google’s API and output an XML stream that matches what pdftohtml generates. That way we could use the same code we normally do to parse the XML.

There are a few gotchas to be aware of when using the Cloud Vision API. The main issues we had were dealing with coordinates from Google that match the word location, so some words with capital letters start higher on the page than words with only lowercase letters. Likewise, some words with letters like “y” and “g” are positioned lower on the page than words without anything below the line the text is written on. Also, we found that sometimes their response gave coordinates that are slightly off, probably thinking a bit of visual noise was part of the letter. Once we had the code for merging text done we had to fiddle with the percent overlap for merging rows so it didn’t get things from above or below the current line, but also didn’t miss things that were part of it (common examples we hit were things like putting the “a” from the word “a” (as in “a” book) on the wrong line.

We also considered using the main block of text returned by Google to merge the text. This is an option you might consider for your own project, as it could simplify the process. As part of the response they return a full page block of text which seems to have been generated quite well, accounting for table cells and whatnot. We ended up not using that, though, because we wanted to have more control over where a line ends. For example, in case a file was processed that had enough text in each cell to not appear to be a table, but instead looked like a long line of text.

One other issue to be aware of when using the Cloud Vision API–according to the documentation you can send it a PDF file directly, but we received an error when attempting that. Instead, we first convert the PDF to a JPEG, then send that to the API. We use ImageMagick for that, which does a splendid job.

How to extract data from locked PDF files

There’s an addendum to all of this that’s worth noting. Some PDF files are locked, and text can’t be extracted from them without first entering a password. That is, the PDF file contains text that could be selected, but because of permissions placed on the file you won’t be able to select or copy text from it without first entering a password.

Well, here’s a little secret–you can often get around this issue by converting the PDF to a PostScript file, then back again. There are two little gems known as pdf2ps and ps2pdf that are handy in these cases. You can use them to magically turn a locked PDF into an unlocked one.

Conclusion

If you’re looking for an inexpensive way to extract text from PDF files containing images Google Cloud Vision API may very well be your best bet. It works amazingly well today, and will only get better over time.